Nvidia’s $46.7B Q2 proves the platform, but its next fight is ASIC economics on inference

Behind Nvidia's strong quarterly results are ASICs gaining ground in key Nvidia segments, challenging its growth in the quarters to come.

By using two co-evolving AI models, the R-Zero framework generates its own learning curriculum, moving beyond the need for labeled datasets.

OpenAI hopes the more natural-sounding voices of its new speech model, gpt-realtime, will lead enterprises to use more AI-generated voices in applications.

Editor’s note: Target set out to modernize its digital search experience to better match guest expectations and support more intuitive discovery across millions of products. To meet that challenge, they rebuilt their platform with hybrid search powered by filtered vector queries and AlloyDB AI. The result: a faster, smarter, more resilient search experience that’s already improved product discovery relevance by 20% and delivered measurable gains in performance and guest satisfaction.

The search bar on Target.com is often the first step in a guest’s shopping journey. It’s where curiosity meets convenience and where Target has the opportunity to turn a simple query into a personalized, relevant, and seamless shopping experience.

Our Search Engineering team takes that responsibility seriously. We wanted to make it easier for every guest to find exactly what they’re looking for, and maybe even something they didn’t know they needed. That meant rethinking search from the ground up. We set out to improve result relevance, support long-tail discovery, reduce dead ends, and deliver more intuitive, personalized results. As we pushed the boundaries of personalization and scale, we began reevaluating the systems that power our digital experience. That journey led us to reimagine search using hybrid techniques that bring together traditional and semantic methods and are backed by a powerful new foundation built with AlloyDB AI.

Hybrid search is where carts meet context

Retail search is hard. You’re matching guest expectations, which can sometimes be expressed in vague language, against an ever-changing catalog of millions of products. Now that generative AI is reshaping how customers engage with brands, we know traditional keyword search isn’t enough. That’s why we built a hybrid search platform combining classic keyword matching with semantic search powered by vector embeddings.
It’s the best of both worlds: exact lexical matches for precision and contextual meaning for relevance. But hybrid search also introduces technical challenges, especially when it comes to performance at scale.

Fig. 1: Hybrid Search blends two powerful approaches to help guests find the most relevant results

Choosing the right database for AI-powered retrieval

Our goals were to surface semantically relevant results for natural language queries, apply structured filters like price, brand, or availability, and deliver fast, personalized search results even during peak usage times. So we needed a database that could power our next-generation hybrid search platform by supporting real-time, filtered vector search across a massive product catalog, while maintaining millisecond-level latency even during peak demand. We did this by using a multi-index design that yields highly relevant results by fusing the flexibility of semantic search with the precision of keyword-based retrieval. In addition to retrieval, we developed a multi-channel relevance framework that dynamically modifies ranking tactics in response to contextual cues like product novelty, seasonality, personalization and other relevance signals.

Fig. 2: High-level architecture of the services being built within Target

We had been using a different database for similar workloads, but it required significant tuning to handle filtered approximate nearest neighbor (ANN) search at scale. As our ambitions grew, it became clear we needed a more flexible, scalable backend that also provided the highest quality results with the lowest latency. We took this problem to Google to explore the latest advancements in this area, and of course, Google is no stranger to search! AlloyDB for PostgreSQL stood out, as Google Cloud had infused the underlying techniques from Google.com search into the product to enable any organization to build high quality experiences at scale.
It also offered PostgreSQL compatibility with integrated vector search, the ScaNN index, and native SQL filtering in a fully managed service. That combination allowed us to consolidate our stack, simplify our architecture, and accelerate development. AlloyDB now sits at the core of our search system to power low-latency hybrid retrieval that scales smoothly across seasonal surges and for millions of guest search sessions every day while ensuring we serve more relevant results.

Filtered vector search at scale

Guests often search for things like “eco-friendly water bottles under $20” or “winter jackets for toddlers.” These queries blend semantic nuance with structured constraints like price, category, brand, sizes or store availability. With AlloyDB, we can run these hybrid queries that combine vector similarity and SQL filters easily without sacrificing speed or relevance. Thanks to recent innovations in AlloyDB AI, including optimized filtered vector search and adaptive query filtering, we’ve seen:

- Up to 10x faster execution compared to our previous stack
- A 20% improvement in product discovery relevance
- Half as many “no results” queries

These improvements have extended deeper into our operations. We’ve reduced vector query response times by 60%, which resulted in a significant improvement in the guest experience. During high-traffic events, AlloyDB has consistently delivered more than 99.99% uptime, providing us with the confidence that our digital storefront can keep pace with demand when it matters most. Since search is an external-facing, mission-critical service, we deploy multiple AlloyDB clusters across multiple regions, allowing us to achieve even higher effective reliability.
These reliability gains have also led to fewer operational incidents, so our engineering teams can devote more time to experimentation and feature delivery.

Fig. 3: AlloyDB AI helps Target combine structured and unstructured data with SQL and vector search. For example, this improved search experience now delivers more seasonally relevant styles (e.g., long sleeves) on page one!

AlloyDB’s cloud-first architecture and features give us the flexibility to handle millions of filtered vector queries per day and support thousands of concurrent users – no need to overprovision or compromise performance.

Building smarter search with AlloyDB AI

What’s exciting is how quickly we can iterate. AlloyDB’s managed infrastructure and PostgreSQL compatibility let us move fast and experiment with new ranking models, seasonal logic, and even AI-native features like:

- Semantic ranking in SQL: We can prioritize search results based on relevance to the query intent.
- Natural language support: Our future interfaces will let guests search the way they speak – no more rigid filters or dropdowns.

AlloyDB features state-of-the-art models and natural language in addition to the state-of-the-art ScaNN vector index. Google’s commitment and leadership in AI infused in AlloyDB has given us the confidence to evolve our service in step with the pace of the overall AI & data landscape.

The next aisle over: What's ahead for Target

Search at Target is evolving into something far more dynamic – an intelligent, multimodal layer that helps guests connect with what they need, when and how they need it. As our guests engage across devices, languages, and formats, we want their experience to feel seamless and smart. With AlloyDB AI and Google Cloud’s rapidly evolving data and AI stack, we’re confident in our ability to stay ahead of guest expectations and deliver more personalized, delightful shopping moments every day.
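To make the hybrid retrieval idea in the Target post concrete, here is a minimal in-memory sketch. It applies a structured filter (price), ranks the surviving products once by keyword overlap and once by vector similarity, and fuses the two rankings. The post does not say how Target fuses results, so reciprocal rank fusion is an assumption here; the catalog, the three-dimensional embeddings, and every function name are hypothetical, and a production system would run the filter and similarity search inside the database rather than in Python.

```python
import math

# Hypothetical toy catalog: (id, title, price, embedding).
# Real embeddings come from a model; these 3-d vectors are made up.
CATALOG = [
    ("p1", "stainless steel water bottle", 18.0, [0.9, 0.1, 0.0]),
    ("p2", "eco-friendly recycled water bottle", 12.0, [0.8, 0.5, 0.1]),
    ("p3", "bamboo travel mug", 19.0, [0.6, 0.7, 0.0]),
    ("p4", "toddler winter jacket", 35.0, [0.0, 0.1, 0.9]),
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_rank(query, items):
    """Rank product ids by the number of query terms found in the title."""
    terms = set(query.lower().split())
    scored = [(len(terms & set(title.split())), pid) for pid, title, _, _ in items]
    ranked = sorted(scored, key=lambda s: -s[0])
    return [pid for score, pid in ranked if score > 0]


def vector_rank(query_vec, items):
    """Rank product ids by cosine similarity to the query embedding."""
    scored = [(cosine(query_vec, emb), pid) for pid, _, _, emb in items]
    return [pid for _, pid in sorted(scored, key=lambda s: -s[0])]


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several rankings: each list contributes 1 / (k + rank) per item."""
    scores = {}
    for ranking in rankings:
        for rank, pid in enumerate(ranking, start=1):
            scores[pid] = scores.get(pid, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda pid: -scores[pid])


def hybrid_search(query, query_vec, max_price):
    """Filter first, then fuse the keyword and vector rankings."""
    candidates = [item for item in CATALOG if item[2] <= max_price]
    return reciprocal_rank_fusion(
        [keyword_rank(query, candidates), vector_rank(query_vec, candidates)]
    )


# Hypothetical query embedding for "eco-friendly water bottle".
results = hybrid_search("eco-friendly water bottle", [0.8, 0.5, 0.0], max_price=20.0)
print(results)
```

The bamboo mug shares no keywords with the query, yet it still appears in the results because the vector ranking contributes to its fused score. The jacket never appears because the price filter removes it before either ranking runs.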
Note from Amit Ganesh, VP of Engineering at Google Cloud: Target’s journey is a powerful example of how enterprises are already transforming search experiences using AlloyDB AI. As Vishal described, filtered vector search is unlocking new levels of relevance and scale. At Google Cloud, we’re continuing to expand the capabilities of AlloyDB AI to support even more intelligent, agent-driven, multimodal applications. Here’s what’s new:

- Agentspace integration: Developers can now build AI agents that query AlloyDB in real time, combining structured data with natural language reasoning.
- AlloyDB natural language: Applications can securely query structured data using plain English (or French, or 250+ other languages) backed by interactive disambiguation and strong privacy controls.
- Enhanced vector support: With AlloyDB’s ScaNN index and adaptive query filtering, vector search with filters now performs up to 10x faster.
- AI query engine: SQL developers can use natural language expressions to embed Gemini model reasoning directly into queries.
- Three new models: AlloyDB AI now supports Gemini’s text embedding model, a cross-attention reranker, and a multimodal model that brings vision and text into a shared vector space.

These capabilities are designed to accelerate innovation – whether you’re improving product discovery like Target or building new agent-based interfaces from the ground up.

Learn more:

- Google Cloud databases supercharge the AI developer experience
- AlloyDB AI drives innovation for application developers
- Discover how AlloyDB combines the best of PostgreSQL with the power of Google Cloud in our latest e-book.
- Try AlloyDB at no cost for 30 days with AlloyDB free trial clusters!
- Learn more about AlloyDB for PostgreSQL.

The promise of Google Kubernetes Engine (GKE) is the power of Kubernetes with ease of management, including planning and creating clusters, deploying and managing applications, configuring networking, ensuring security, and scaling workloads. However, when it comes to autoscaling workloads, customers tell us the fully managed mode of operation, GKE Autopilot, hasn’t always delivered the speed and efficiency they need. That’s because autoscaling a Kubernetes cluster involves creating and adding new nodes, which can sometimes take several minutes. That’s just not good enough for high-volume, fast-scaling applications.

Enter the container-optimized compute platform for GKE Autopilot, a completely reimagined autoscaling stack for GKE that we introduced earlier this year. In this blog, we take a deeper look at autoscaling in GKE Autopilot, and how to start using the new container-optimized compute platform for your workloads today.

Understanding GKE Autopilot and its scaling challenges

With the fully managed version of Kubernetes, GKE Autopilot users are primarily responsible for their applications, while GKE takes on the heavy lifting of managing nodes and nodepools, creating new nodes, and scaling applications. With traditional Autopilot, if an application needed to scale quickly, GKE first needed to provision new nodes onto which the application could scale, which sometimes took several minutes.

To circumvent this, users often employed techniques like "balloon pods": dummy pods created with low priority to hold onto nodes, which helped ensure immediate capacity for demanding scaling use cases. However, this approach is costly, as it involves holding onto unused resources, and is also difficult to maintain.
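For readers who have not seen the balloon pod workaround, the sketch below builds the two Kubernetes objects it typically involves: a negative-value PriorityClass and a Deployment of placeholder pods that real workloads can preempt. The object names, the pause image tag, and the sizing are illustrative assumptions rather than values from the post. The script prints the objects as a JSON `List` so they can be inspected or saved.

```python
import json


def balloon_manifests(replicas, cpu, memory):
    """Build a low-priority PriorityClass and a placeholder Deployment.

    All names below are hypothetical; adjust them to your cluster.
    """
    priority_class = {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": "balloon-priority"},
        # Negative value: any pod with default priority (0) can preempt these.
        "value": -10,
        # Balloon pods themselves never preempt other pods.
        "preemptionPolicy": "Never",
        "globalDefault": False,
        "description": "Low priority for placeholder (balloon) pods.",
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "balloon"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": "balloon"}},
            "template": {
                "metadata": {"labels": {"app": "balloon"}},
                "spec": {
                    "priorityClassName": priority_class["metadata"]["name"],
                    "terminationGracePeriodSeconds": 0,
                    "containers": [
                        {
                            "name": "pause",
                            # The pause container does nothing; it only
                            # reserves the requested resources.
                            "image": "registry.k8s.io/pause:3.9",
                            "resources": {
                                "requests": {"cpu": cpu, "memory": memory}
                            },
                        }
                    ],
                },
            },
        },
    }
    return [priority_class, deployment]


manifests = balloon_manifests(replicas=3, cpu="500m", memory="512Mi")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

The cost the post describes is visible in the numbers: three replicas requesting 500m CPU each keep 1.5 CPUs reserved for pods that do no work, and the replica count has to be retuned by hand as traffic patterns change.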
Introducing the container-optimized compute platform

We developed the container-optimized compute platform with a clear mission: to provide you with near-real-time, vertically and horizontally scalable compute capacity precisely when you need it, at optimal price and performance. We achieved this through a fundamental redesign of GKE’s underlying compute stack.

The container-optimized compute platform runs GKE Autopilot nodes on a new family of virtual machines that can be dynamically resized while they are running, starting from fractions of a CPU, all without disrupting workloads. To improve the speed of scaling and resizing, GKE clusters now also maintain a pool of dedicated pre-provisioned compute capacity that can be automatically allocated for workloads in response to increased resource demands. More importantly, given that with GKE Autopilot you only pay for the compute capacity that you requested, this pre-provisioned capacity does not impact your bill.

The result is flexible compute that provides capacity where and when it's required.
Key improvements include:

- Up to 7x faster pod scheduling time compared to clusters without container-optimized compute
- Significantly improved application response times for applications with autoscaling enabled
- Introduction of in-place pod resize in Kubernetes 1.33, allowing for pod resizing without disruption

The container-optimized compute platform also includes a pre-enabled high-performance Horizontal Pod Autoscaler (HPA) profile, which delivers:

- Highly consistent horizontal scaling reaction times
- Up to 3x faster HPA calculations
- Higher resolution metrics, leading to improved scheduling decisions
- Accelerated performance for up to 1000 HPA objects

All these features are now available out of the box in GKE Autopilot 1.32 or later. The power of the new platform is evident in demonstrations where replica counts are rapidly scaled, showcasing how quickly new pods get scheduled.

How to leverage container-optimized compute

To benefit from these improvements in GKE Autopilot, simply create a new GKE Autopilot cluster based on GKE Autopilot 1.32 or later.

```shell
gcloud container clusters create-auto <cluster_name> \
    --location=<region> \
    --project=<project_id>
```

If your existing cluster is on an older version, upgrade it to 1.32 or newer to benefit from the container-optimized compute platform’s new features. To optimize performance, we recommend that you use the general-purpose compute class for your workload. While the container-optimized compute platform supports various types of workloads, it works best with services that require gradual scaling and small (2 CPU or less) resource requests, like web applications.
While the container-optimized compute platform is versatile, it is not currently suitable for specific deployment types:

- One-pod-per-node deployments, such as anti-affinity situations
- Batch workloads

The container-optimized compute platform marks a significant leap forward in improving application autoscaling within GKE and will unlock more capabilities in the future. We encourage you to try it out today in GKE Autopilot.
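The HPA calculations mentioned above follow the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance of 1 (0.1 by default). The sketch below is a simplified illustration of that formula only. It ignores min/max replica bounds, stabilization windows, and unready pods, and it says nothing about how the GKE performance profile is implemented. The function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Simplified HPA replica calculation.

    Returns the current count when the metric ratio is within `tolerance`
    of 1.0; otherwise scales the count by the ratio and rounds up.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Average CPU utilization of 90% against a 60% target on 4 replicas.
scaled_up = desired_replicas(4, current_metric=90, target_metric=60)

# 63% against a 60% target is inside the default tolerance: no change.
unchanged = desired_replicas(4, current_metric=63, target_metric=60)

print(scaled_up, unchanged)
```

Because the result depends directly on how fresh the metric is, higher-resolution metrics and faster calculations translate into scaling decisions that track load more closely.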

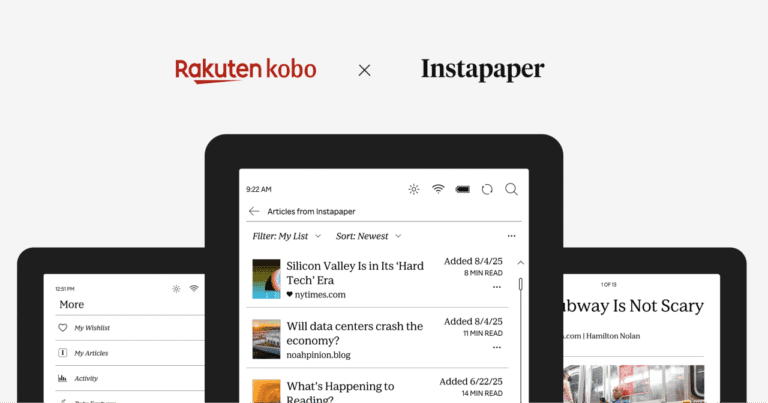

A big draw of Kobo e-readers has always been Pocket integration. But, when Mozilla announced in late May that it was shutting down the read-it-later app in July, the Kobo community was left in a bit of a lurch. To the company’s credit, in late July, it announced that it would be replacing Pocket with […]

Fans are accusing Will Smith of sharing an AI-generated video from his tour, but the truth isn't that simple.

Fubo is launching a new Sports plan on September 2nd that costs $55.99 per month and comes with over 20 live sports and news-focused channels, including the ESPN and Fox Sports channels, as well as local stations owned by ABC, CBS, and Fox. Where available, this package includes coverage for network TV-broadcast pro and college […]

This week, Netflix shared a few more details about its budding location business: The company will open its very first Netflix House in Philadelphia on November 11th, with a second location set to open in Dallas on December 11th. Both locations will span across 100,000 square feet, and offer ticketed experiences related to Netflix franchises […]

Academics once loved Twitter—but in the age of X they’ve abandoned it in droves.