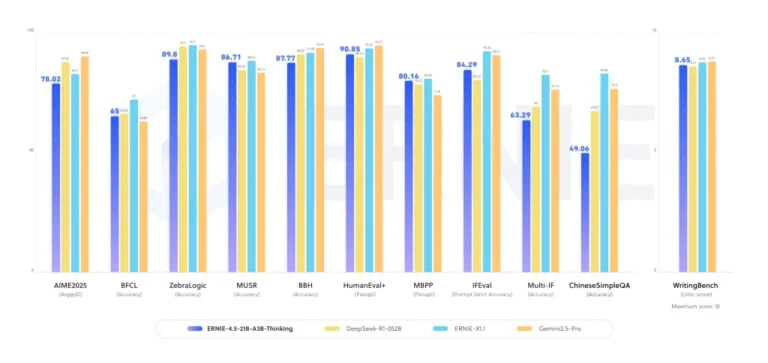

Baidu Releases ERNIE-4.5-21B-A3B-Thinking: A Compact MoE Model for Deep Reasoning

The Baidu AI Research team has released ERNIE-4.5-21B-A3B-Thinking, a reasoning-focused large language model designed for efficiency, long-context reasoning, and tool integration. Part of the ERNIE-4.5 family, the model uses a Mixture-of-Experts (MoE) architecture with 21B total parameters, of which only 3B are active per token, which keeps per-token compute low while maintaining competitive reasoning capability. […] The post Baidu Releases ERNIE-4.5-21B-A3B-Thinking: A Compact MoE Model for Deep Reasoning appeared first on MarkTechPost.
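
To make the "21B total, 3B active" distinction concrete, here is a minimal toy sketch of top-k expert routing, the general mechanism behind MoE layers. Only the two parameter counts come from the announcement. The function names, the number of experts, the value of `k`, and the gate scores are hypothetical and are not ERNIE's actual configuration or code.

```python
import math

TOTAL_PARAMS = 21e9   # total parameters, as stated in the announcement
ACTIVE_PARAMS = 3e9   # parameters active per token, as stated in the announcement


def active_fraction(total, active):
    """Share of the model's parameters that participate for a single token."""
    return active / total


def top_k_route(gate_logits, k):
    """Select the k highest-scoring experts and softmax-normalize their weights.

    gate_logits: one router score per expert (hypothetical values).
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(gate_logits[i] for i in chosen)  # subtract max for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in chosen]
    norm = sum(exps)
    return [(i, e / norm) for i, e in zip(chosen, exps)]


def moe_forward(x, experts, gate_logits, k):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_logits, k))


if __name__ == "__main__":
    print(f"active share per token: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")
    # Four toy "experts"; a real MoE layer uses feed-forward networks here.
    experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 1, lambda x: x * 4]
    print(moe_forward(3.0, experts, gate_logits=[0.1, 2.0, -1.0, 2.0], k=2))
```

In this toy setup only two of the four experts run for a given input, which is why the per-token compute tracks the active parameter count rather than the total.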