3 Questions: The pros and cons of synthetic data in AI

Artificially created data offer benefits from cost savings to privacy preservation, but their limitations require careful planning and evaluation, Kalyan Veeramachaneni says.

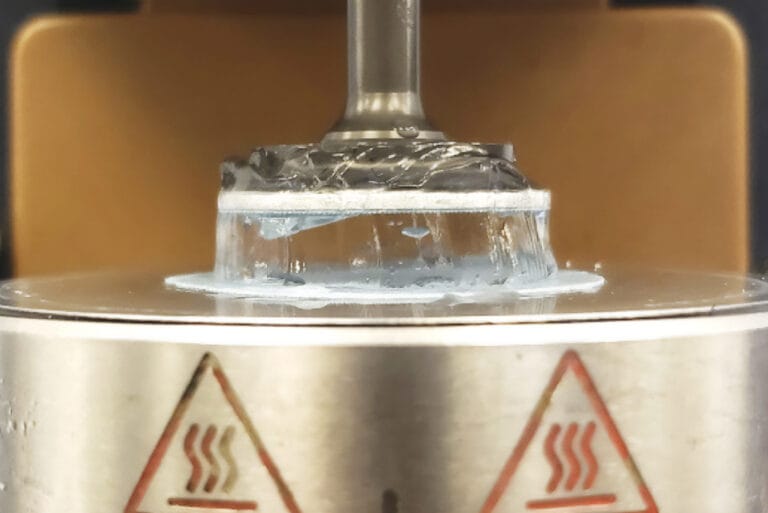

New findings could help manufacturers design gels, lotions, or even paving materials that last longer and perform more predictably.

The Pentagon says the move will save money, but acknowledges risk to military readiness.

arXiv:2507.09128v2 Announce Type: replace Abstract: A modern paradigm for generalization in machine learning and AI consists of pre-training a task-agnostic foundation model, generally obtained using self-supervised and multimodal contrastive learning. The resulting representations can be used for prediction on a downstream task for which no labeled data is available. We present a theoretical framework to better understand this approach, called zero-shot prediction. We identify the target quantities that zero-shot prediction aims to learn, or learns in passing, and the key conditional independence relationships that enable its generalization ability.

arXiv:2509.00941v1 Announce Type: cross Abstract: Langevin Monte Carlo (LMC) algorithms are popular Markov Chain Monte Carlo (MCMC) methods to sample a target probability distribution, which arises in many applications in machine learning. Inspired by regime-switching stochastic differential equations in the probability literature, we propose and study regime-switching Langevin dynamics (RS-LD) and regime-switching kinetic Langevin dynamics (RS-KLD). Based on their discretizations, we introduce regime-switching Langevin Monte Carlo (RS-LMC) and regime-switching kinetic Langevin Monte Carlo (RS-KLMC) algorithms, which can also be viewed as LMC and KLMC algorithms with random stepsizes. We also propose frictional-regime-switching kinetic Langevin dynamics (FRS-KLD) and its associated algorithm frictional-regime-switching kinetic Langevin Monte Carlo (FRS-KLMC), which can also be viewed as the KLMC algorithm with random frictional coefficients. We provide their 2-Wasserstein non-asymptotic convergence guarantees to the target distribution, and analyze the iteration complexities. Numerical experiments using both synthetic and real data are provided to illustrate the efficiency of our proposed algorithms.
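The "LMC with random stepsizes" reading mentioned in the abstract above can be illustrated with a small sketch. This is not the paper's RS-LMC algorithm: it is a plain unadjusted Langevin update whose step size is picked by a hypothetical two-state Markov chain. The function name `regime_switching_lmc`, the step sizes, the switching probability, and the standard Gaussian target are all assumptions chosen for illustration.

```python
import numpy as np


def regime_switching_lmc(grad_u, x0, step_sizes, switch_prob, n_steps, rng):
    """Unadjusted Langevin updates with a step size driven by a two-state chain.

    Illustrative only: each chain carries its own regime (0 or 1), which flips
    with probability `switch_prob` before every update (an assumed switching rule).
    """
    x = np.array(x0, dtype=float)            # one scalar state per chain
    step_sizes = np.asarray(step_sizes, dtype=float)
    regime = np.zeros(x.shape[0], dtype=int)  # all chains start in regime 0
    for _ in range(n_steps):
        flip = rng.random(x.shape[0]) < switch_prob
        regime = np.where(flip, 1 - regime, regime)
        h = step_sizes[regime]                # random step size for this update
        noise = rng.standard_normal(x.shape)
        # Langevin step: gradient drift plus Gaussian noise scaled by sqrt(2h)
        x = x - h * grad_u(x) + np.sqrt(2.0 * h) * noise
    return x


# Target: standard Gaussian, U(x) = x^2 / 2, so grad U(x) = x (assumed example).
rng = np.random.default_rng(0)
samples = regime_switching_lmc(
    grad_u=lambda x: x,
    x0=np.full(2000, 3.0),
    step_sizes=(0.01, 0.05),
    switch_prob=0.1,
    n_steps=1000,
    rng=rng,
)
print(samples.mean(), samples.var())
```

With these settings the sample mean and variance should land near 0 and 1, up to Monte Carlo error and the usual discretization bias of unadjusted Langevin updates.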

arXiv:2411.12965v2 Announce Type: replace Abstract: Nearest neighbor (NN) algorithms have been extensively used for missing data problems in recommender systems and sequential decision-making systems. Prior theoretical analysis has established favorable guarantees for NN when the underlying data is sufficiently smooth and the missingness probabilities are lower bounded. Here we analyze NN with non-smooth non-linear functions with vast amounts of missingness. In particular, we consider matrix completion settings where the entries of the underlying matrix follow a latent non-linear factor model, with the non-linearity belonging to a Hölder function class that is less smooth than Lipschitz. Our results establish the following favorable properties for a suitable two-sided NN: (1) the mean squared error (MSE) of NN adapts to the smoothness of the non-linearity, (2) under certain regularity conditions, the NN error rate matches the rate obtained by an oracle equipped with the knowledge of both the row and column latent factors, and finally (3) NN's MSE is non-trivial for a wide range of settings even when several matrix entries might be missing deterministically. We support our theoretical findings via extensive numerical simulations and a case study with data from a mobile health study, HeartSteps.
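To make the idea of nearest-neighbor imputation in matrix completion concrete, here is a minimal sketch of a simpler one-sided (row-wise) variant. It is not the two-sided estimator analyzed in the abstract above; the function name `row_nn_impute`, the mean-squared distance over commonly observed columns, and the `radius` threshold are assumptions made for illustration.

```python
import numpy as np


def row_nn_impute(matrix, mask, i, j, radius):
    """Estimate entry (i, j) by averaging column j over rows close to row i.

    `mask` is a boolean array marking observed entries. Row k counts as a
    neighbor when its mean squared distance to row i, taken over columns
    observed in both rows (excluding column j), is at most `radius`.
    """
    neighbor_values = []
    for k in range(matrix.shape[0]):
        if k == i or not mask[k, j]:
            continue                      # need a different row that observes column j
        common = mask[i] & mask[k]        # columns observed in both rows
        common[j] = False                 # never use the target column for distances
        if not common.any():
            continue
        dist = np.mean((matrix[i, common] - matrix[k, common]) ** 2)
        if dist <= radius:
            neighbor_values.append(matrix[k, j])
    # Return NaN when no neighbor qualifies
    return float(np.mean(neighbor_values)) if neighbor_values else float("nan")


# Toy data: rows 0 and 2 agree on the observed columns; entry (0, 2) is missing.
matrix = np.array([[1.0, 2.0, 0.0],
                   [5.0, 6.0, 7.0],
                   [1.0, 2.0, 3.0]])
mask = np.array([[True, True, False],
                 [True, True, True],
                 [True, True, True]])
estimate = row_nn_impute(matrix, mask, i=0, j=2, radius=0.5)
print(estimate)
```

In the toy example only row 2 falls within the radius of row 0, so the estimate for the missing entry is taken from row 2.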

arXiv:2509.00923v1 Announce Type: cross Abstract: Monte Carlo Counterfactual Regret Minimization (MCCFR) has emerged as a cornerstone algorithm for solving extensive-form games, but its integration with deep neural networks introduces scale-dependent challenges that manifest differently across game complexities. This paper presents a comprehensive analysis of how neural MCCFR component effectiveness varies with game scale and proposes an adaptive framework for selective component deployment. We identify that theoretical risks such as nonstationary target distribution shifts, action support collapse, variance explosion, and warm-starting bias have scale-dependent manifestation patterns, requiring different mitigation strategies for small versus large games. Our proposed Robust Deep MCCFR framework incorporates target networks with delayed updates, uniform exploration mixing, variance-aware training objectives, and comprehensive diagnostic monitoring. Through systematic ablation studies on Kuhn and Leduc Poker, we demonstrate scale-dependent component effectiveness and identify critical component interactions. The best configuration achieves final exploitability of 0.0628 on Kuhn Poker, representing a 60% improvement over the classical framework (0.156). On the more complex Leduc Poker domain, selective component usage achieves exploitability of 0.2386, a 23.5% improvement over the classical framework (0.3703), highlighting the importance of careful component selection over comprehensive mitigation. Our contributions include: (1) a formal theoretical analysis of risks in neural MCCFR, (2) a principled mitigation framework with convergence guarantees, (3) comprehensive multi-scale experimental validation revealing scale-dependent component interactions, and (4) practical guidelines for deployment in larger games.

arXiv:2307.12022v2 Announce Type: replace Abstract: In real-world healthcare settings, treatment decisions often involve optimizing for multivariate outcomes such as treatment efficacy and severity of side effects based on individual preferences. However, existing statistical methods for estimating dynamic treatment regimes (DTRs) usually assume a univariate outcome, and the few methods that deal with composite outcomes suffer from limitations such as restrictions to a single time point and limited theoretical guarantees. To address these limitations, we propose Latent Utility Q-Learning (LUQ-Learning), a latent model approach that adapts Q-learning to tackle the aforementioned difficulties. Our framework allows for an arbitrary finite number of decision points and outcomes, incorporates personal preferences, and achieves asymptotic performance guarantees with realistic assumptions. We conduct simulation experiments based on an ongoing trial for low back pain as well as a well-known trial for schizophrenia. In both settings, LUQ-Learning achieves highly competitive performance compared to alternative baselines.

arXiv:2509.00753v1 Announce Type: cross Abstract: The FBMS R package facilitates Bayesian model selection and model averaging in complex regression settings by employing a variety of Monte Carlo model exploration methods. At its core, the package implements an efficient Mode Jumping Markov Chain Monte Carlo (MJMCMC) algorithm, designed to improve mixing in multi-modal posterior landscapes within Bayesian generalized linear models. In addition, it provides a genetically modified MJMCMC (GMJMCMC) algorithm that introduces nonlinear feature generation, thereby enabling the estimation of Bayesian generalized nonlinear models (BGNLMs). Within this framework, the algorithm maintains and updates populations of transformed features, computes their posterior probabilities, and evaluates the posteriors of models constructed from them. We demonstrate the effective use of FBMS for both inferential and predictive modeling in Gaussian regression, focusing on different instances of the BGNLM class of models. Furthermore, through a broad set of applications, we illustrate how the methodology can be extended to increasingly complex modeling scenarios, extending to other response distributions and mixed effect models.

arXiv:2509.02538v1 Announce Type: cross Abstract: In federated learning, communication cost can be significantly reduced by transmitting the information over the air through physical channels. In this paper, we propose a new class of adaptive federated stochastic gradient descent (SGD) algorithms that can be implemented over physical channels, taking into account both channel noise and hardware constraints. We establish theoretical guarantees for the proposed algorithms, demonstrating convergence rates that are adaptive to the stochastic gradient noise level. We also demonstrate the practical effectiveness of our algorithms through simulation studies with deep learning models.